Protecting Android with more Linux kernel defenses

Posted by Jeff Vander Stoep, Android Security team

Android relies heavily on the Linux kernel for enforcement of its security model. To better protect the kernel, we’ve enabled a number of mechanisms within Android. At a high level these protections are grouped into two categories: memory protections and attack surface reduction.

Memory protections

One of the major security features provided by the kernel is memory protection for userspace processes in the form of address space separation. Unlike userspace processes, the kernel’s various tasks live within one address space, so a vulnerability anywhere in the kernel can potentially impact unrelated portions of the system’s memory. Kernel memory protections are designed to maintain the integrity of the kernel in spite of vulnerabilities.

Mark memory as read-only/no-execute

This feature segments kernel memory into logical sections and sets restrictive page access permissions on each section. Code is marked as read-only and executable. Data sections are marked as no-execute and further segmented into read-only and read-write sections. This feature is enabled with the config option CONFIG_DEBUG_RODATA. It was put together by Kees Cook and is based on a subset of Grsecurity’s KERNEXEC feature by Brad Spengler and Qualcomm’s CONFIG_STRICT_MEMORY_RWX feature by Larry Bassel and Laura Abbott. CONFIG_DEBUG_RODATA landed in the upstream kernel for arm/arm64 and has been backported to Android’s 3.18+ arm/arm64 common kernel.
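The kernel applies these permissions to its own sections, which an ordinary program can't observe directly. As a rough userspace analogue of the same page-permission idea (an illustration, not the kernel mechanism itself), the C sketch below maps one page read-write, initializes it, drops write permission with mprotect(), and then checks in a forked child that a later write is killed with SIGSEGV. The function name readonly_write_faults is hypothetical.

```c
#define _GNU_SOURCE
#include <signal.h>
#include <stdbool.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/resource.h>
#include <sys/wait.h>
#include <unistd.h>

/* Userspace analogue (hypothetical helper) of making data read-only once
 * it has been initialised. Returns true if a write to the page after
 * mprotect(PROT_READ) kills the writer with SIGSEGV, i.e. the page
 * permission is really enforced. */
bool readonly_write_faults(void)
{
    size_t page = (size_t)sysconf(_SC_PAGESIZE);
    char *p = mmap(NULL, page, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return false;

    /* Writable phase: fill in the data while writing is still allowed. */
    strcpy(p, "set up while writable");

    /* Drop write permission. The page was never mapped with PROT_EXEC,
     * so it is now read-only and not requested as executable. */
    if (mprotect(p, page, PROT_READ) != 0) {
        munmap(p, page);
        return false;
    }

    pid_t child = fork();
    if (child < 0) {
        munmap(p, page);
        return false;
    }
    if (child == 0) {
        struct rlimit no_core = {0, 0};
        setrlimit(RLIMIT_CORE, &no_core);   /* avoid leaving a core file */
        ((volatile char *)p)[0] = 'X';      /* expected to fault */
        _exit(0);                           /* reached only if it did not */
    }

    int status = 0;
    waitpid(child, &status, 0);
    munmap(p, page);
    return WIFSIGNALED(status) && WTERMSIG(status) == SIGSEGV;
}
```

The write is done in a child process so the fault can be observed without crashing the caller.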

Restrict kernel access to userspace

This feature improves protection of the kernel by preventing it from directly accessing userspace memory. This can make a number of attacks more difficult: userspace memory is fully under an attacker’s control, whereas attackers have significantly less control over kernel memory, particularly executable kernel memory when CONFIG_DEBUG_RODATA is enabled. Similar features already existed, the earliest being Grsecurity’s UDEREF. This feature is enabled with the config option CONFIG_CPU_SW_DOMAIN_PAN; it was implemented by Russell King for ARMv7 and backported to Android’s 4.1 kernel by Kees Cook.

Improve protection against stack buffer overflows

Stack-protector-strong (GCC’s -fstack-protector-strong option) protects against stack buffer overflows much like its predecessor, stack-protector, but it additionally covers more array types; the original only protected character arrays. Stack-protector-strong was implemented by Han Shen and added to GCC 4.9.
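To make "more array types" concrete: a function like the hypothetical sum_first_samples below has a local int array but no character array, so with GCC’s default settings plain -fstack-protector is expected to leave it uninstrumented, while -fstack-protector-strong protects any function with a local array. This is a sketch for illustration; whether a canary is actually emitted depends on the compiler and optimization level, since the optimizer may remove a local array entirely.

```c
#include <stddef.h>

/* Hypothetical example: copies up to 16 samples into a local int array
 * and sums them. There is an int array but no char array, so this is the
 * kind of function only -fstack-protector-strong is expected to cover. */
long sum_first_samples(const int *samples, size_t n)
{
    int local[16];
    const size_t cap = sizeof local / sizeof local[0];

    /* The bounds check is the real fix; a stack canary is only a
     * backstop that turns a missed overflow into an abort. */
    if (n > cap)
        n = cap;
    for (size_t i = 0; i < n; i++)
        local[i] = samples[i];

    long total = 0;
    for (size_t i = 0; i < n; i++)
        total += local[i];
    return total;
}
```

One way to compare is to compile with `gcc -O0 -S -fstack-protector` and with `gcc -O0 -S -fstack-protector-strong` and look for a call to __stack_chk_fail in the generated assembly for this function.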

Attack surface reduction

Attack surface reduction attempts to expose fewer entry points to the kernel without breaking legitimate functionality. Reducing attack surface can include removing code, removing access to entry points, or selectively exposing features.

Remove default access to debug features

The kernel’s perf system provides infrastructure for performance measurement and can be used for analyzing both the kernel and userspace applications. Perf is a valuable tool for developers, but it adds unnecessary attack surface for the vast majority of Android users. In Android Nougat, access to perf will be blocked by default. Developers may still access perf by enabling developer settings and using adb to set a property: “adb shell setprop security.perf_harden 0”.
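A developer who wants to check from native code whether perf is currently reachable could probe it directly. The sketch below, with hypothetical names, opens a userspace-only software counter through the raw perf_event_open syscall (glibc provides no wrapper) and classifies the result. On a device where perf is blocked, the call is expected to fail with a permission error for unprivileged callers; which errno is returned is an assumption here, so both EACCES and EPERM are treated as "denied". Container sandboxes and seccomp policies can also deny the call.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <linux/perf_event.h>
#include <string.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Hypothetical result type for the probe below. */
enum perf_probe_result {
    PERF_PROBE_ALLOWED,     /* perf_event_open succeeded */
    PERF_PROBE_DENIED,      /* permission error (assumed EACCES/EPERM) */
    PERF_PROBE_UNAVAILABLE  /* other failure, e.g. no perf support */
};

/* Tries to open a counter that needs no kernel profiling: a software
 * task-clock counter for the calling thread, user space only. */
enum perf_probe_result probe_perf_access(void)
{
    struct perf_event_attr attr;
    memset(&attr, 0, sizeof attr);
    attr.size = sizeof attr;
    attr.type = PERF_TYPE_SOFTWARE;
    attr.config = PERF_COUNT_SW_TASK_CLOCK;
    attr.disabled = 1;        /* open it, but don't start counting */
    attr.exclude_kernel = 1;  /* don't ask for kernel profiling */
    attr.exclude_hv = 1;

    /* pid 0 = this thread, cpu -1 = any CPU, group_fd -1, no flags. */
    long fd = syscall(SYS_perf_event_open, &attr, 0, -1, -1, 0UL);
    if (fd >= 0) {
        close((int)fd);
        return PERF_PROBE_ALLOWED;
    }
    if (errno == EACCES || errno == EPERM)
        return PERF_PROBE_DENIED;
    return PERF_PROBE_UNAVAILABLE;
}
```

Running the probe before and after changing security.perf_harden is one way to confirm the property took effect.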

The patchset for blocking access to perf consists of kernel and userspace parts. The kernel patch is by Ben Hutchings and is derived from Grsecurity’s CONFIG_GRKERNSEC_PERF_HARDEN by Brad Spengler. The userspace changes were contributed by Daniel Micay. Thanks to Wish Wu and others for responsibly disclosing security vulnerabilities in perf.

Restrict app access to ioctl commands

Much of Android’s security model is described and enforced by SELinux. The ioctl() syscall represented a major gap in the granularity of that enforcement: SELinux could allow or deny ioctl() on an object as a whole, but not individual ioctl commands. Ioctl command whitelisting was added to SELinux to provide this per-command control over the ioctl syscall.

Most of the kernel vulnerabilities reported on Android occur in drivers and are reached using the ioctl syscall, for example CVE-2016-0820.

Some ioctl commands are needed by third-party applications; however, most are not, and access to them can be restricted without breaking legitimate functionality. In Android Nougat, only a small whitelist of socket ioctl commands is available to applications. For select devices, applications’ access to GPU ioctls has been similarly restricted.
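SELinux’s per-command ioctl permissions key the decision on the low 16 bits of the command, split into a high byte (the driver, or ioctl type) and a low byte (the function). The C below is a simplified, hypothetical model of that lookup, not SELinux’s actual data structures: one bit per (driver, function) pair, with everything denied until explicitly allowed. The two socket commands in allow_example_socket_ioctls are arbitrary examples, not Android’s actual whitelist.

```c
#include <linux/sockios.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical model: one bit for each of the 256 x 256 possible
 * (driver byte, function byte) pairs of a 16-bit ioctl command. */
struct ioctl_whitelist {
    uint32_t bits[256][256 / 32];
};

void whitelist_init(struct ioctl_whitelist *w)
{
    memset(w, 0, sizeof *w);            /* default deny */
}

void whitelist_allow(struct ioctl_whitelist *w, unsigned int cmd)
{
    uint8_t driver = (uint8_t)(cmd >> 8);   /* bits 8..15 */
    uint8_t func = (uint8_t)cmd;            /* bits 0..7 */
    w->bits[driver][func / 32] |= UINT32_C(1) << (func % 32);
}

bool whitelist_permits(const struct ioctl_whitelist *w, unsigned int cmd)
{
    uint8_t driver = (uint8_t)(cmd >> 8);
    uint8_t func = (uint8_t)cmd;
    return (w->bits[driver][func / 32] >> (func % 32)) & 1u;
}

/* Example policy: two read-only interface queries, chosen only for
 * illustration. */
void allow_example_socket_ioctls(struct ioctl_whitelist *w)
{
    whitelist_allow(w, SIOCGIFFLAGS);   /* get interface flags */
    whitelist_allow(w, SIOCGIFINDEX);   /* get interface index */
}
```

In this model a command such as SIOCSIFFLAGS (set interface flags) stays denied because nobody allowed it, so the request never reaches the driver code that would handle it.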

Require seccomp-bpf

Seccomp provides an additional sandboxing mechanism allowing a process to

restrict the syscalls and syscall arguments available using a configurable

filter. Restricting the availability of syscalls can dramatically cut down on

the exposed attack surface of the kernel. Since seccomp was first introduced on

Nexus devices in Lollipop, its availability across the Android ecosystem has

steadily improved. With Android Nougat, seccomp support is a requirement for all

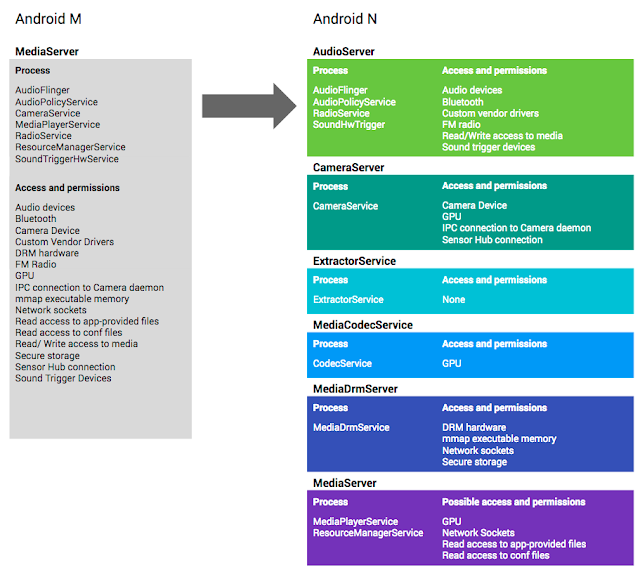

devices. On Android Nougat we are using seccomp on the mediaextractor and

mediacodec processes as part of the href="http://android-developers.blogspot.com/2016/05/hardening-media-stack.html">media

hardening effort.
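The following is a minimal seccomp-bpf sketch in C, not the filters Android actually ships for mediaextractor or mediacodec. It builds a classic BPF program over struct seccomp_data that first checks the architecture (syscall numbers differ between ABIs), then makes one chosen syscall fail with EPERM and allows everything else. PR_SET_NO_NEW_PRIVS is set first, which the kernel requires before a process without CAP_SYS_ADMIN may install a filter. The function names and the choice of getppid as the denied call are illustrative, and the x86_64 x32 ABI is ignored for brevity.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <linux/audit.h>
#include <linux/filter.h>
#include <linux/seccomp.h>
#include <stddef.h>
#include <sys/prctl.h>
#include <sys/syscall.h>
#include <sys/wait.h>
#include <unistd.h>

#if defined(__x86_64__)
#define FILTER_ARCH AUDIT_ARCH_X86_64
#elif defined(__aarch64__)
#define FILTER_ARCH AUDIT_ARCH_AARCH64
#elif defined(__arm__)
#define FILTER_ARCH AUDIT_ARCH_ARM
#else
#error "add the AUDIT_ARCH_* value for this architecture"
#endif

/* Hypothetical helper: make syscall `denied_nr` fail with EPERM and allow
 * everything else. Returns 0 on success, -1 with errno set on failure. */
int deny_syscall_with_eperm(long denied_nr)
{
    struct sock_filter filter[] = {
        /* Kill the process if the syscall ABI is not the expected one. */
        BPF_STMT(BPF_LD | BPF_W | BPF_ABS, offsetof(struct seccomp_data, arch)),
        BPF_JUMP(BPF_JMP | BPF_JEQ | BPF_K, FILTER_ARCH, 1, 0),
        BPF_STMT(BPF_RET | BPF_K, SECCOMP_RET_KILL),
        /* Load the syscall number; fail the denied one, allow the rest. */
        BPF_STMT(BPF_LD | BPF_W | BPF_ABS, offsetof(struct seccomp_data, nr)),
        BPF_JUMP(BPF_JMP | BPF_JEQ | BPF_K, (unsigned int)denied_nr, 0, 1),
        BPF_STMT(BPF_RET | BPF_K, SECCOMP_RET_ERRNO | (EPERM & SECCOMP_RET_DATA)),
        BPF_STMT(BPF_RET | BPF_K, SECCOMP_RET_ALLOW),
    };
    struct sock_fprog prog = {
        .len = (unsigned short)(sizeof filter / sizeof filter[0]),
        .filter = filter,
    };

    /* Ensures execve() can't regain privileges the filter took away. */
    if (prctl(PR_SET_NO_NEW_PRIVS, 1, 0, 0, 0) != 0)
        return -1;
    return prctl(PR_SET_SECCOMP, SECCOMP_MODE_FILTER, &prog, 0, 0);
}

/* Demo: filters can't be removed, so install one in a forked child and
 * report (1 = yes, 0 = no, -1 = error) whether getppid() fails there. */
int getppid_denied_in_child(void)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        if (deny_syscall_with_eperm(SYS_getppid) != 0)
            _exit(2);                   /* could not install the filter */
        errno = 0;
        long r = syscall(SYS_getppid);
        _exit(r == -1 && errno == EPERM ? 0 : 1);
    }
    int status = 0;
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) && WEXITSTATUS(status) == 0;
}
```

Production policies usually invert this shape: they allow a short list of syscalls the process needs and fail or kill everything else, which is what actually shrinks the reachable kernel surface.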

Ongoing efforts

There are other projects underway aimed at protecting the kernel:

- The Kernel Self Protection Project is developing runtime and compiler defenses for the upstream kernel.
- Further sandbox tightening and attack surface reduction with SELinux is ongoing in AOSP.
- Minijail provides a convenient mechanism for applying many containment and sandboxing features offered by the kernel, including seccomp filters and namespaces.
- Projects like kasan and kcov help fuzzers discover the root cause of crashes and intelligently construct test cases that increase code coverage, ultimately resulting in a more efficient bug hunting process.

Due to these efforts and others, we expect the security of the kernel to continue improving. As always, we appreciate feedback on our work and welcome suggestions for how we can improve Android. Contact us at security@android.com.